This article briefly covers the foundations of data integration, examines how they have been built upon over time, and argues that highly specialized integration solutions will soon lose relevance to comprehensive, architecturally agnostic solutions that serve a wider variety of use cases.

It will end with a list of what to look for when purchasing a future-proof data integration solution.

NASA data center. February 1990.

Over the last 40 years, the concepts and practices behind data integration have become tremendously complex.

In the 1980s, organizations integrated their data in just one way, and for one reason: with in-house solutions to collect data in a database. Today, they integrate data in many ways, using a wide assortment of specialized solutions, which connect a seemingly infinite number of systems.

Ironically, in the face of this ballooning complexity, the process of data integration must be made simpler if it is to remain feasible at all.

Data Integration Solutions Before the Cloud

At the heart of data integration, both 40 years ago and today, is the extract, transform, load (ETL) process. As the name suggests, data in this process is extracted from sources, transformed (cleansed and customized), and loaded into a database.
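To make the three steps concrete, here is a minimal sketch in Python. It assumes a hypothetical list of payment records as the source and an in-memory SQLite database as the target; the table name, field names, and cleansing rules are illustrative only, not taken from any particular product.

```python
import sqlite3

# Hypothetical source records; in practice these would come from an application or API.
SOURCE_ROWS = [
    {"id": 1, "email": " Alice@Example.com ", "amount": "19.90"},
    {"id": 2, "email": "bob@example.com", "amount": "5"},
    {"id": 3, "email": "", "amount": "12.00"},  # missing email, dropped during transform
]


def extract():
    """Extract: read raw records from the source."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: trim and lowercase emails, drop incomplete rows, cast amounts to float."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue
        cleaned.append((row["id"], email, float(row["amount"])))
    return cleaned


def load(conn, rows):
    """Load: write the transformed rows into the target database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the three steps in order."""
    load(conn, transform(extract()))


conn = sqlite3.connect(":memory:")
run_etl(conn)
```

Only cleansed rows ever reach the target here, which is the defining trait of ETL: transformation happens before loading.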

In the 1980s and early 1990s, organizations were performing ETL integrations to bring transactional and operational data from applications into databases, where it could be stored and updated. The primary aim of these databases was housekeeping; they were designed to hold a current snapshot of an organization, rather than to put data to profitable use.

Then, in the mid-1990s, a new type of database was designed to solve this problem: the data warehouse. Data warehouses were optimized for storing the complete analytical history of an organization, and supported complex manipulations of data, like joining, aggregation, and computation of what-if scenarios. To get the data into the analytics database, it needed to be copied from the storage database.

Collectively, ETL refers to the processes of gathering data from disparate sources in a storage database and copying it to an analytics database for use.

At this time, there was no standard methodology for building ETL solutions, so the vast majority of them were built on an ad-hoc basis by in-house data personnel. They were hard to maintain and, since each implementation was so specific to its case, they could not be reused in other contexts.

Data Integration Solutions in the Cloud Era

In the pre-cloud era, ETL was effectively the only type of data integration; it was an essential process for businesses, and the resources to do it were both expensive and scarce.

Naturally, software companies recognized an opportunity here, and the market for commercial ETL tools began to expand.

By 2003, there were over 200 ETL solutions on the market, each specialized for different integration cases. When cloud computing entered the mainstream in the mid-2000s, even more ready-made data integration solutions entered the market. Today, there are too many to count.

These solutions cater to use cases that go far beyond ETL. Indeed, as more devices have come online, including through the Internet of Things, and as storage has become more affordable, enterprises are demanding more data and more dynamic integration scenarios to maintain their competitive edge. As a result, the concept of centralized data centers is becoming outdated. This is confirmed by recent research, which shows that 81% of companies today have already developed or are in the process of developing a multi-cloud strategy.

ETL is still a major part of data integration, but it’s one process among many. Other common types of integration include:

- ELT. ELT is a variation of ETL in which the data is loaded into a target system before transformation. This approach is popular when dealing with large volumes of data because it enables the “dirty” data to be dumped into a database quickly, and cleaned later as needed.

- CDC (change data capture). This is a type of data replication that is focused on replicating specific data in near real time, in order to quickly update data across systems.

- Event-based integration (ESB / iPaaS). This is a very simple type of integration that sends small data “events” between operational systems. For example, when a customer makes a payment in your payment gateway, the event can be sent to your CRM, so the payment information appears in that customer’s profile.

- Reverse ETL. Often considered the last mile of the modern data stack, reverse ETL is the copying of enriched data (often first-party data) from a data warehouse into operational tools like CRMs, in-app messaging systems, and marketing automation platforms. This gives the users of these tools direct access to custom insights.
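Of the integration types above, CDC is perhaps the easiest to illustrate in a few lines. The sketch below is a simplified, query-based variant that assumes every source row carries a hypothetical, monotonically increasing `version` field; production CDC tools more commonly read the database's transaction log instead. All names and records here are illustrative.

```python
def capture_changes(source, last_version):
    """Return the rows changed since last_version, plus the new high-water mark."""
    changed = [row for row in source if row["version"] > last_version]
    new_mark = max((row["version"] for row in changed), default=last_version)
    return changed, new_mark


def apply_changes(target, changes):
    """Upsert the changed rows into the target, keyed by id."""
    for row in changes:
        target[row["id"]] = {"name": row["name"], "version": row["version"]}


# Hypothetical source table and an empty replica.
source = [
    {"id": 1, "name": "Alice", "version": 1},
    {"id": 2, "name": "Bob", "version": 2},
]
target = {}
mark = 0

# Initial sync: everything is newer than version 0.
changes, mark = capture_changes(source, mark)
apply_changes(target, changes)

# A later update in the source bumps the row's version.
source[1] = {"id": 2, "name": "Robert", "version": 3}

# Incremental sync: only the updated row is captured and replicated.
changes, mark = capture_changes(source, mark)
apply_changes(target, changes)
```

The point of the high-water mark is that each run moves only what changed since the previous run, which is what keeps replication fast enough to feel near real time.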

Without question, accommodating such complex integration scenarios with an in-house team is nearly impossible. So, businesses today typically use a variety of specialized commercial integration solutions to serve each of these scenarios and others.

Data Integration Solutions of Tomorrow

Because data is such a core function of business today, many of the data integration solutions on the market are designed to be operated by professionals who have no knowledge of coding, like marketers, salespeople, and support agents.

There is good reason for this. A lot of companies don’t have dedicated data personnel, or the budget to hire them. And in companies that do have dedicated data personnel, the teams that actually need insights from data don’t always have the time or capacity to liaise with the data personnel to get them.

This, I believe, strongly indicates where data integration solutions are headed.

Pretty soon, non-technical professionals on business teams won’t want to be asking themselves which tool they need for ETL, which tool they need for ELT, or which tool they need for reverse ETL. They will simply want to pick a data source, pick a data destination, and send.

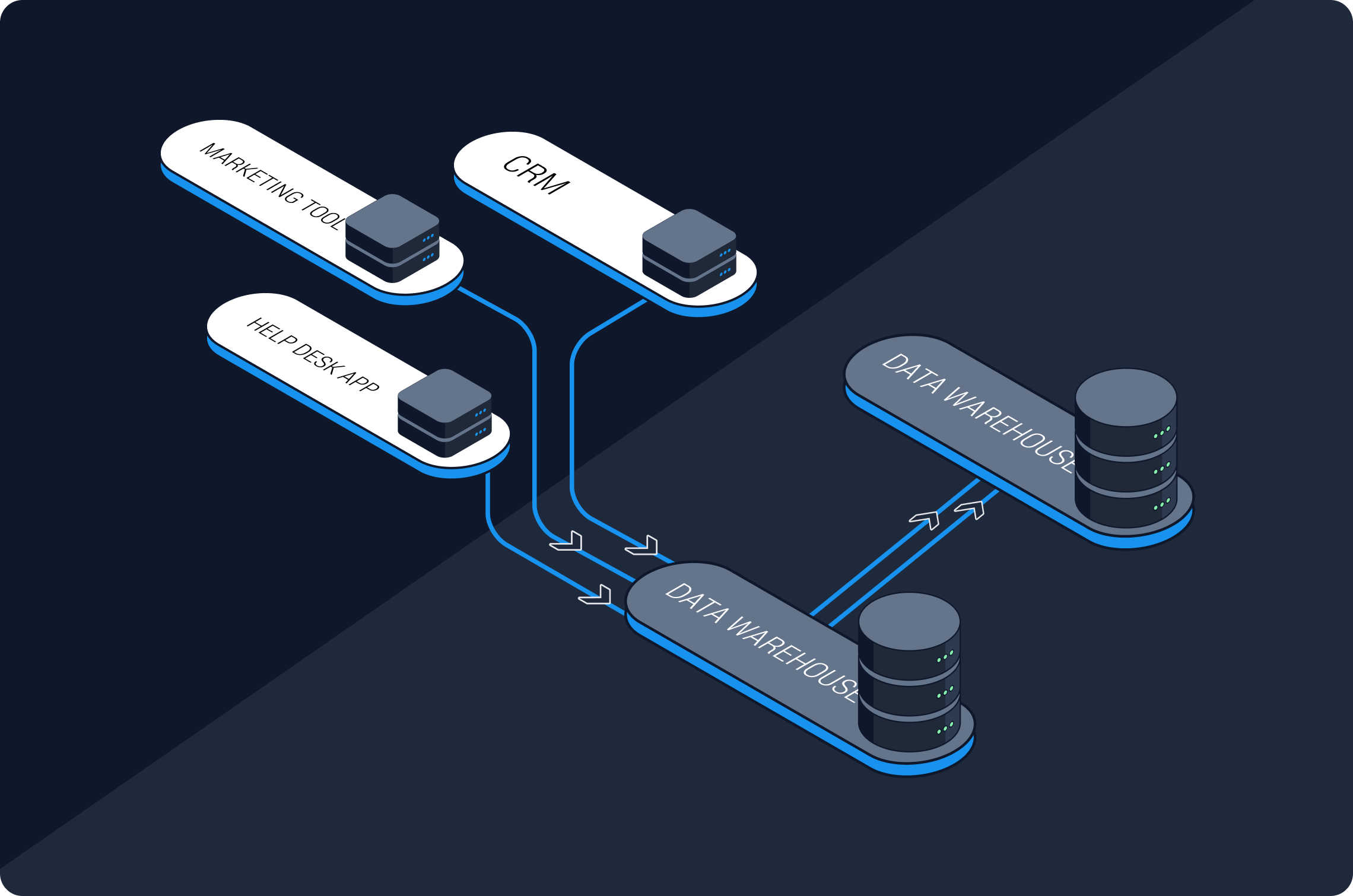

The enterprise data integration solutions of tomorrow, therefore, will need to be architecturally agnostic. In simpler terms, this means they will need to be able to connect any two systems, regardless of where they sit in a data architecture.
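One way to picture architectural agnosticism is a pair of small interfaces that every connector implements, so that any source can be paired with any destination. The sketch below is only an illustration under that assumption; `Source`, `Destination`, `ListSource`, `InMemoryDestination`, and `sync` are hypothetical names, not the API of any real product.

```python
from typing import Iterable, Protocol

Record = dict


class Source(Protocol):
    def read(self) -> Iterable[Record]: ...


class Destination(Protocol):
    def write(self, records: Iterable[Record]) -> int: ...


class ListSource:
    """Hypothetical source backed by an in-memory list of records."""

    def __init__(self, records):
        self._records = list(records)

    def read(self):
        return iter(self._records)


class InMemoryDestination:
    """Hypothetical destination that collects records in a list."""

    def __init__(self):
        self.rows = []

    def write(self, records):
        before = len(self.rows)
        self.rows.extend(records)
        return len(self.rows) - before  # number of records written


def sync(source: Source, destination: Destination) -> int:
    """Move records from any source to any destination."""
    return destination.write(source.read())


warehouse = InMemoryDestination()
crm = InMemoryDestination()

# Application -> warehouse (an ETL-like flow).
sync(ListSource([{"id": 1, "plan": "pro"}]), warehouse)

# Warehouse -> application (a reverse-ETL-like flow), using the same function.
sync(ListSource(warehouse.rows), crm)
```

Because `sync` only depends on the two interfaces, the direction of the flow stops mattering to the user: pick a source, pick a destination, and send.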

For solution vendors, supporting any-to-any connectivity will be a never-ending job. But for end users, this will make integrating data easier and simpler than it’s ever been.

For a real-life example of how a company can use a single integration tool to move data from end to end for a fraction of the cost and labor, see our case study on Livesport.

What to Look for in a Future-Proof Integration Solution

Naturally, there will probably always be a place on the market for specialized solutions that only support specific integration scenarios. But more and more often, we will see companies adopting a unified solution that supports all integration scenarios and users, regardless of technical ability. Below are some important things to look for when adopting a unified solution.

- Architecturally agnostic (supports the following integration types):

  - ETL/ELT

  - Database replication (including CDC)

  - Reverse ETL

  - End-to-end integration of online sources with dashboarding applications

  - Event-based integrations

- Low- or no-code: It should be easy for non-technical professionals to use; otherwise, they will depend on engineers for access to data.

- Inbuilt data quality mechanisms: The high cost of poor data quality for businesses is well documented. A future-proof data integration solution should therefore have embedded mechanisms that prevent poor-quality data from ever flowing downstream. These include anomaly detectors, rule-based filters, and detailed monitoring and alerts.

- Large portfolio of connectors: Companies are adopting more SaaS apps every year, so it’s important that a vendor’s connector portfolio reflects this.

- Fully managed: So your business teams will never have to start mornings with a broken dashboard, and your in-house data team, if you have one, will never have to worry about API changes or pipeline maintenance.

- Tight security: The vendor itself should be SOC 2 Type II certified, and offer inbuilt mechanisms for users of the platform to keep data secure, such as:

  - Multi-factor authentication

  - Custom data encryption keys

  - Optional exclusion of personally identifiable information from datasets

  - Optional SSH tunneling

  - Data masking/hashing ability

  - A logging system
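Two of the criteria above, rule-based filters and data masking/hashing, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the rules, field names, and salt are hypothetical, and a salted SHA-256 hash is only one of several possible masking approaches.

```python
import hashlib

# Hypothetical rules: each maps a field name to a check its value must pass.
RULES = {
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
    "country": lambda v: isinstance(v, str) and len(v) == 2,
}


def passes_rules(record):
    """Rule-based filter: every ruled field must be present and valid."""
    return all(
        field in record and check(record[field]) for field, check in RULES.items()
    )


def mask_email(record, salt="example-salt"):
    """Return a copy of the record with the email replaced by a salted SHA-256 digest."""
    masked = dict(record)
    digest = hashlib.sha256((salt + record["email"]).encode("utf-8")).hexdigest()
    masked["email"] = digest
    return masked


def prepare(records):
    """Drop records that fail the rules, then mask the email in the rest."""
    return [mask_email(r) for r in records if passes_rules(r)]


incoming = [
    {"email": "alice@example.com", "amount": 19.9, "country": "CZ"},
    {"email": "bob@example.com", "amount": -5, "country": "CZ"},  # negative amount, filtered out
]
clean = prepare(incoming)
```

Filtering before data leaves the pipeline is what keeps bad records from reaching dashboards, and hashing the email lets downstream users join on the field without seeing the raw value.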

Data integration solutions that meet these criteria will likely be able to support your organization at any and all phases of data maturity.

