Artificial Intelligence (AI) is reshaping industries and economies worldwide. Harvard Business Review estimates AI will contribute $13 trillion to the global economy in the next decade.

The success of AI initiatives depends largely on the quality of training data. However, that data is often locked in isolated silos or scattered across departments. This is where data integration helps: it combines data from multiple sources into a unified view, which then fuels AI models that deliver accurate results.

Let’s explore why data integration is important for AI success, along with tips for integrating data successfully:

- The importance of effective data integration for AI

- Data integration challenges for AI

- Benefits of data integration for AI

- Real-world case studies: data integration impact on AI initiatives

- Prime your data for AI by integrating it with Dataddo

The Importance of Effective Data Integration for AI

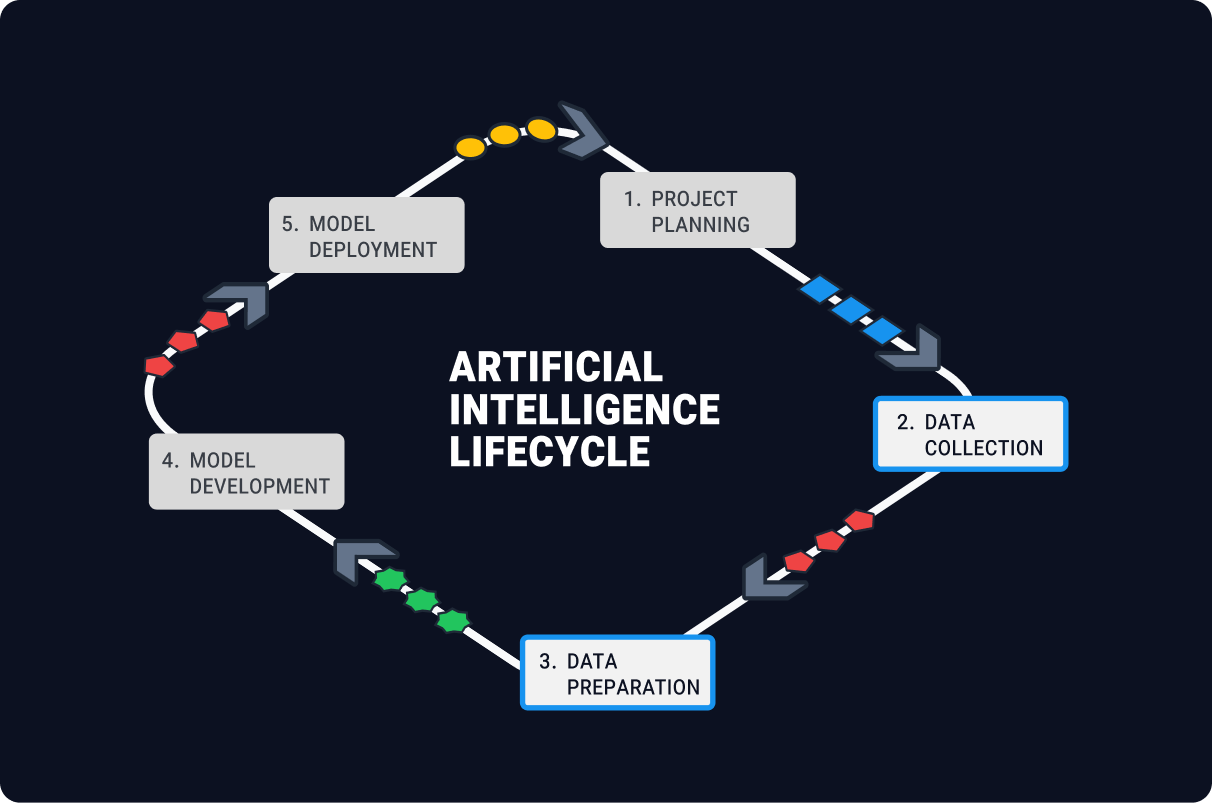

AI models rely on data for their training and development. This includes supervised and unsupervised learning, where data is used to teach AI models how to make predictions and identify patterns.

In the context of AI, the saying "garbage in, garbage out" captures the importance of quality data. If poor data goes in, the model's outputs will also be flawed, leading to inaccurate predictions and decisions. The data collection and preparation phases of the AI lifecycle therefore deserve more attention.

According to McKinsey, “industry leaders using their data to power AI models are finding out that poor data quality is a consistent roadblock for the highest-value AI use cases.”

Data Integration Challenges for AI Initiatives

Why do teams struggle with data quality? Multiple challenges stand in the way of efficient data integration for AI models:

- Data Silos: This is the main data integration challenge. Data is often stored in isolated systems, which makes it difficult to access and use for AI projects.

- Data Heterogeneity: AI models demand data from multiple sources, which arrive in different formats and structures. The data must be unified before it can be processed by machines.

- Data Quality Issues: Issues like missing values and inconsistencies can undermine AI initiatives. One widely cited statistic claims that 87% of data science projects fail, with poor data quality and labeling among the main reasons.

- Security and Compliance Concerns: Privacy is a major concern, given the ability of AI systems to extract hidden insights from data. Personally identifiable information (PII), for example, should always be filtered out of training datasets or, at minimum, hashed.

If not addressed properly, these challenges can lead to:

- Inaccurate predictions and insights

- Biased decision-making

- Compliance issues

- Reduced trust in AI-driven outcomes
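To make the heterogeneity, quality, and privacy challenges above more concrete, here is a minimal Python sketch of what addressing them could look like for a handful of records. It is an illustration only: the source names (`crm`, `shop`), the field mappings, the required fields, and the `PII_KEY` secret are all hypothetical, and a real pipeline would need far more thorough validation.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical secret for keyed hashing; a real one would come from a secrets manager.
PII_KEY = b"replace-with-a-real-secret"

# Hypothetical mapping from each source's field names to one unified schema.
FIELD_MAP = {
    "crm": {"Email": "email", "FullName": "name", "Spend": "spend"},
    "shop": {"customer_email": "email", "customer": "name", "total": "spend"},
}
PII_FIELDS = ("email", "name")
REQUIRED_FIELDS = ("email", "spend")


def pseudonymize(value: str) -> str:
    """Replace a PII value with a keyed SHA-256 digest (HMAC)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(PII_KEY, normalized, hashlib.sha256).hexdigest()


def unify(record: dict, source: str) -> Optional[dict]:
    """Map a source record to the unified schema; return None if it is incomplete."""
    mapping = FIELD_MAP[source]
    unified = {mapping[k]: v for k, v in record.items() if k in mapping}
    # Data quality: reject records with missing or empty required fields.
    if any(unified.get(f) in (None, "") for f in REQUIRED_FIELDS):
        return None
    # Heterogeneity: coerce the amount to a single numeric type.
    unified["spend"] = float(unified["spend"])
    # Privacy: hash PII before the record reaches a training dataset.
    for field in PII_FIELDS:
        if field in unified:
            unified[field] = pseudonymize(str(unified[field]))
    return unified


crm_row = {"Email": "Ann@Example.com", "FullName": "Ann Lee", "Spend": "120.50"}
shop_row = {"customer_email": "ann@example.com ", "customer": "Ann Lee", "total": 30}
bad_row = {"customer_email": "", "customer": "Bob", "total": 10}

rows = [unify(crm_row, "crm"), unify(shop_row, "shop"), unify(bad_row, "shop")]
clean_rows = [r for r in rows if r is not None]
```

A keyed hash is used here rather than a plain one because unkeyed hashes of low-entropy values such as email addresses can often be reversed by guessing. Note that hashing alone does not necessarily satisfy any particular privacy regulation.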

Benefits of Data Integration for AI

Data integration solves the main problem of data silos by bringing data together from multiple sources and eliminating duplicate records.

Data integration also offers various other benefits for AI models, including:

- Improved Data Quality and Consistency: By unifying data from disparate sources, data integration tools ensure that clean and standardized data is used for AI training. This, of course, enhances the reliability of AI models and results in more accurate outcomes.

- Enhanced Data Accessibility: Automated data integration enhances data accessibility and discovery. AI systems can easily find and use relevant information when all the data is synced to one place.

- More Accurate AI Models: If AI models can access data from various sources, they can better understand a problem and propose a solution or make a prediction.

- Reduced Cost: With automated data integration in place, teams spend less time on data collection and preparation and more on actual model development, which lowers cost and shortens time to market.
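As a rough illustration of "bringing data together and eliminating duplicates", the sketch below merges records from two sources and keeps only the most recently updated record per customer. The `customer_id` and `updated` fields and the sample data are assumptions made for the example; "newest record wins" is just one of several possible deduplication rules.

```python
from datetime import date
from typing import Iterable, List


def merge_sources(*sources: Iterable[dict], key: str = "customer_id") -> List[dict]:
    """Combine records from several sources, keeping the newest record per key."""
    latest = {}
    for source in sources:
        for record in source:
            current = latest.get(record[key])
            # Keep this record if the key is new or the record is more recent.
            if current is None or record["updated"] > current["updated"]:
                latest[record[key]] = record
    return sorted(latest.values(), key=lambda r: r[key])


# Hypothetical records from two siloed systems.
crm = [
    {"customer_id": 1, "plan": "basic", "updated": date(2024, 1, 5)},
    {"customer_id": 2, "plan": "pro", "updated": date(2024, 2, 1)},
]
billing = [
    {"customer_id": 1, "plan": "pro", "updated": date(2024, 3, 10)},
    {"customer_id": 3, "plan": "basic", "updated": date(2024, 1, 20)},
]

unified = merge_sources(crm, billing)
```

Here customer 1 appears in both systems, and the unified view keeps only the newer billing record.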

Real-World Case Studies: Data Integration Impact on AI Initiatives

Let's look at two real-world cases of data integration for AI initiatives—one success and one failure.

Success Story: Netflix's Recommendation Engine

Netflix faced the challenge of delivering personalized content recommendations to its users. To address it, the company used a data integration platform that collects and cleanses data from various sources, such as user interactions and external APIs.

With this diverse data, Netflix's AI recommendation engine analyzes users' viewing habits and preferences. It also considers external factors like weather. This enables Netflix to offer highly accurate and personalized content suggestions to each user.

As a result, Netflix has seen increased user engagement, with 75-80% of viewers following its recommendations.

Failure Story: Zillow's AI-Powered Home Valuation

Zillow attempted to use AI to predict home values. The problem was that its model relied on historical data and did not account for real-time market trends or local factors. As a result, the model overestimated home values in certain regions.

The consequences were severe—Zillow experienced financial losses and reputational damage, and had to cancel the initiative.

This case highlights the importance of recent data for AI models, especially when dealing with fast-changing markets like real estate.
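One simple way to guard against stale inputs is a freshness check before data reaches a model. The sketch below is not Zillow's method; it is a generic illustration in which the `observed_at` field, the sample listings, and the 30-day threshold are all assumptions.

```python
from datetime import datetime, timedelta, timezone


def split_by_freshness(records, now, max_age=timedelta(days=30)):
    """Separate records into fresh and stale lists based on their age."""
    fresh, stale = [], []
    for record in records:
        age = now - record["observed_at"]
        (fresh if age <= max_age else stale).append(record)
    return fresh, stale


# Hypothetical listings with the time each price was last observed.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
listings = [
    {"id": "a", "price": 410_000, "observed_at": datetime(2024, 5, 20, tzinfo=timezone.utc)},
    {"id": "b", "price": 385_000, "observed_at": datetime(2023, 11, 1, tzinfo=timezone.utc)},
]

fresh, stale = split_by_freshness(listings, now)
```

Stale records do not have to be discarded; they could be down-weighted or refreshed from the source instead. The point is that the pipeline makes the age of the data visible rather than silently training on it.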

Prime Your Data for AI by Integrating It with Dataddo

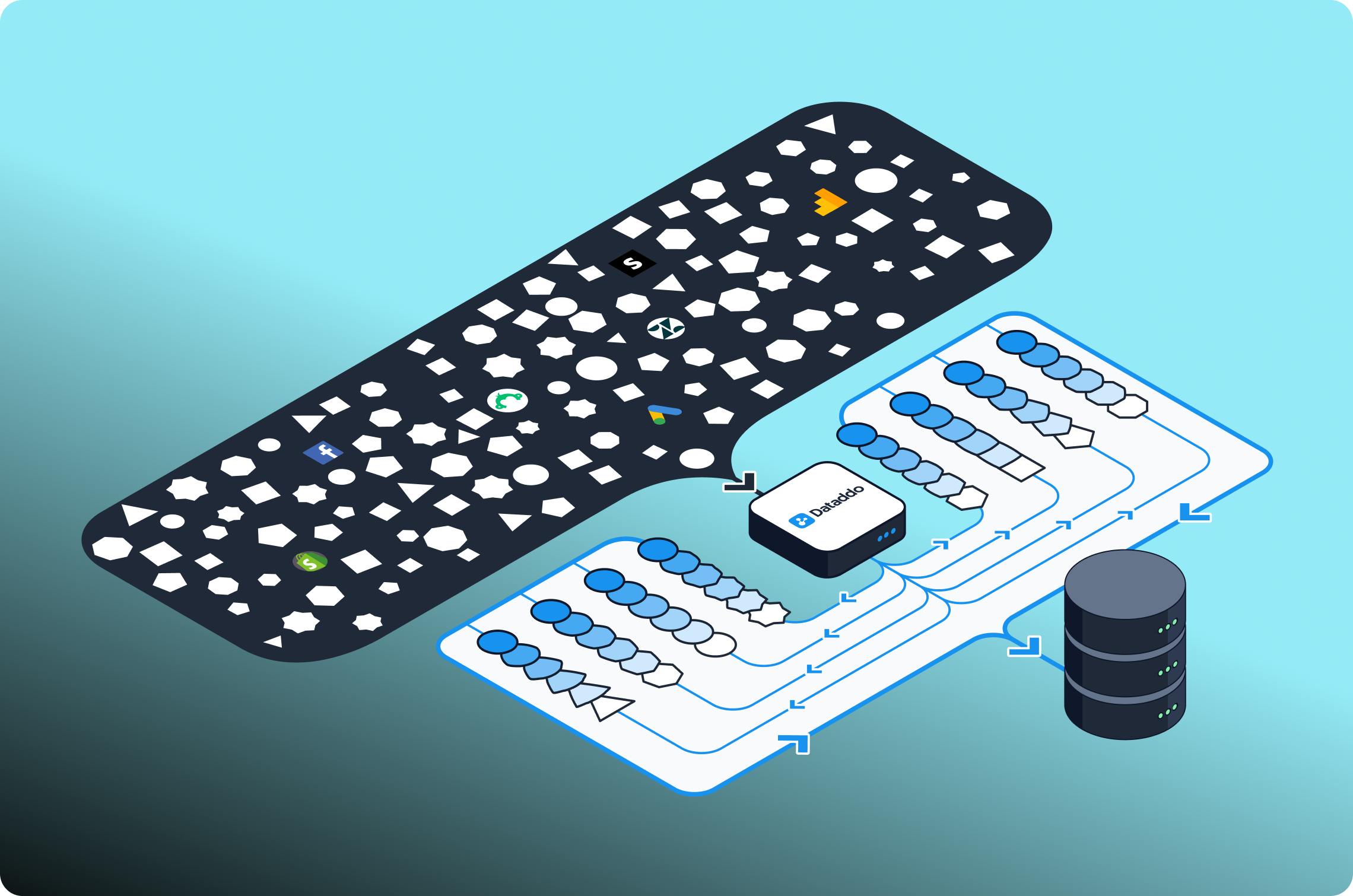

Dataddo is an automated data integration platform that can synchronize data from any source to any destination, ensuring that your AI model has automated access to a complete and up-to-date pool of data.

It offers several features that are essential for the preparation and cleansing of datasets for AI:

- Embedded pre-cleansing capabilities ensure that any extracted data is machine-readable and ready for consumption by an AI model.

- The Data Quality Firewall, configurable per column in a table, ensures that no unwanted data enters your downstream systems.

- Optional exclusion and masking of sensitive information from datasets helps keep your AI initiative compliant.

- Dataddo's engineers fully maintain all pipelines, so you never have to worry about them breaking in the middle of the night.

- Detailed monitoring and logging provide deep observability into what data is being moved and how.

- A vast library of connectors covers a wide range of sources and destinations. Don't see the one you need? Let us know and we'll build it.

In short, Dataddo accelerates data collection, minimizes data preparation, and sets the essential standard of data quality that AI needs to succeed.

Sign up for a free, fully functional trial today.
