LLMs don’t just need data—they need the right data. That means governed, fresh, and context-rich information: not just raw facts, but the relationships, metadata, and semantic structure that give those facts meaning. If you’re serious about building AI agents, copilots, or internal assistants that are trustworthy and scalable, the quality and completeness of the data you feed them will make or break the experience.

The Problem: Your Data Isn’t AI-Ready

Most businesses sit on a mountain of siloed operational data—spread across CRMs, ERPs, marketing tools, databases, and spreadsheets. Feeding this data directly into an LLM without cleaning, aligning, and contextualizing it leads to hallucinations, inconsistencies, or outright failures.

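Even when a company has a data pipeline in place, it’s often designed for dashboards, not LLMs. That’s a problem. Business intelligence tools can tolerate a few missing dimensions or inconsistent schemas, because a human analyst notices the gap and works around it. An LLM can’t: it treats whatever it receives as the full picture and answers accordingly.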

The Solution: Governed Data Activation for AI Tools

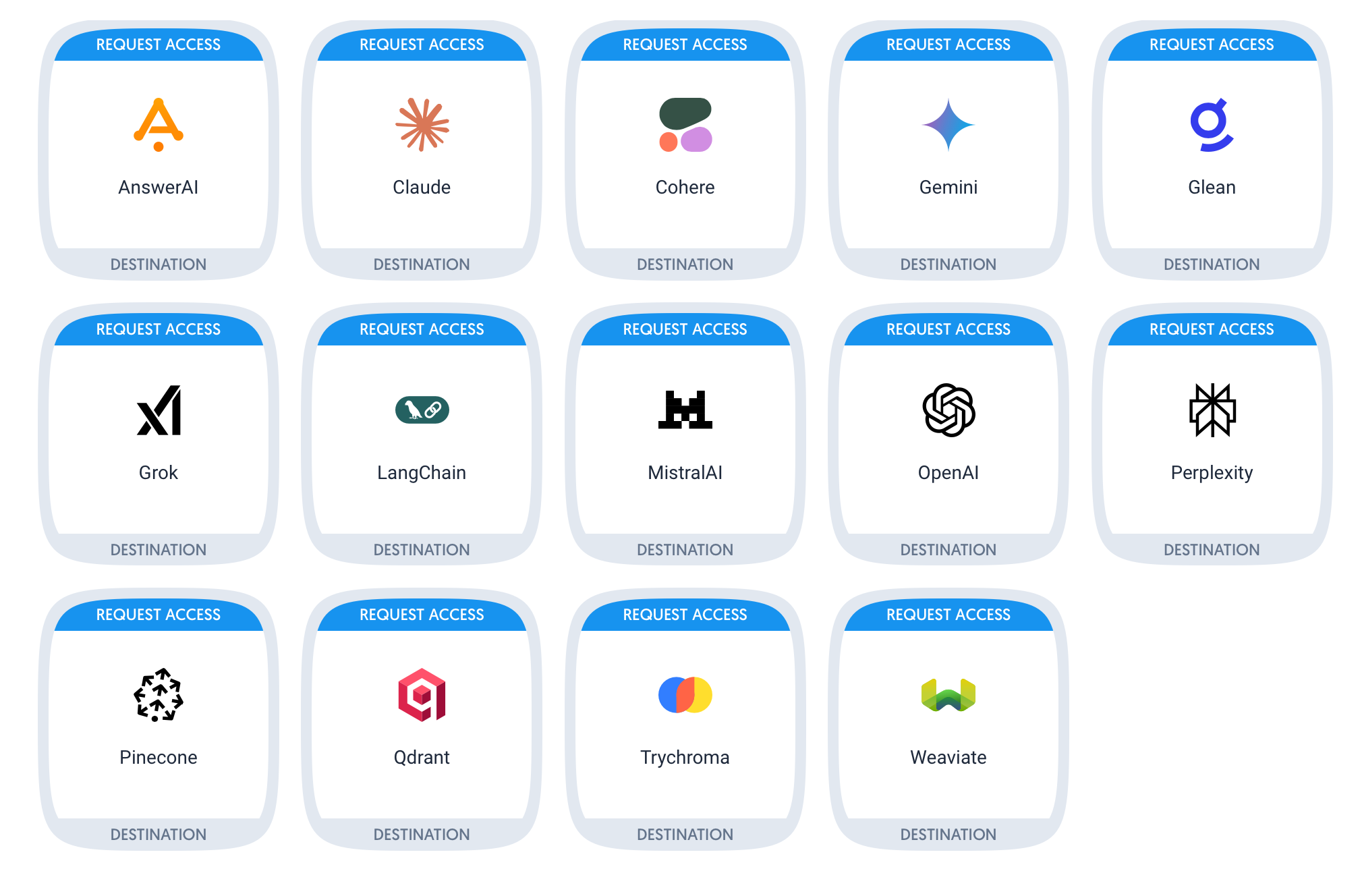

Dataddo now lets you send enriched, structured, and regularly updated data directly to your AI stack—including tools like:

- OpenAI, Claude, Gemini, Mistral, Perplexity – for LLM inference and RAG pipelines
- LangChain, LlamaIndex – for agent frameworks
- Pinecone, Qdrant, Weaviate – for vector databases
- AnswerAI, Trychroma, Grok, Glean – for copilots and AI-enhanced enterprise search

You don’t need to build and maintain these integrations yourself. You don’t need to write and test extraction code. With Dataddo, the data flows directly from your sources—with schema mapping, metadata enrichment, and optional PII hashing handled along the way.
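To make "schema mapping" and "metadata enrichment" concrete, here is a minimal sketch of what those two steps could look like for a single record. It is an illustration only, not Dataddo's implementation: the `FIELD_MAP` contents, the `to_ai_record` helper, and the output shape (a `text` field plus a `metadata` dictionary, a layout many vector stores accept) are all assumptions.

```python
from datetime import datetime, timezone

# Hypothetical mapping from source-system column names to the names
# the AI tool should see. Real mappings depend on your sources.
FIELD_MAP = {
    "AccountName": "account_name",
    "ARR__c": "annual_recurring_revenue",
}


def to_ai_record(raw, source, field_map, synced_at=None):
    """Rename mapped fields and attach provenance metadata.

    Unmapped source fields are dropped so that only governed,
    explicitly named columns reach the downstream tool.
    """
    synced_at = synced_at or datetime.now(timezone.utc)
    fields = {
        target: raw[src] for src, target in field_map.items() if src in raw
    }
    # A flat text rendering that could be embedded or placed in a prompt.
    text = "; ".join(f"{name}: {value}" for name, value in fields.items())
    return {
        "text": text,
        "metadata": {
            "source": source,
            "synced_at": synced_at.isoformat(),
            **fields,
        },
    }


if __name__ == "__main__":
    row = {"AccountName": "Acme", "ARR__c": 1200, "InternalNote": "n/a"}
    print(to_ai_record(row, source="crm", field_map=FIELD_MAP))
```

Keeping the source name and sync timestamp next to each record lets a retrieval pipeline filter out stale entries or cite where an answer came from.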

PII Hashing and AI Readiness

Many LLM pipelines require the use of operational data that includes sensitive information—names, emails, account numbers. Dataddo allows you to hash or exclude personally identifiable information (PII) before sending it to downstream tools, helping you stay compliant while still training and operating powerful AI agents.
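As a rough sketch of what hashing or excluding PII before hand-off can involve, the example below replaces sensitive fields with a keyed SHA-256 digest and drops others entirely. It is not Dataddo's implementation: the `PII_FIELDS` set, the `hash_value` and `protect_record` helpers, and the choice of HMAC-SHA256 are assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical list of fields treated as PII in this example.
PII_FIELDS = {"name", "email", "account_number"}


def hash_value(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 digest of a normalized value.

    Normalizing (trim + lowercase) makes the same email hash to the
    same token across sources, so joins still work after hashing.
    """
    normalized = value.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record, key, drop=frozenset()):
    """Hash PII fields and exclude any field listed in `drop`."""
    protected = {}
    for field, value in record.items():
        if field in drop:
            continue  # excluded: never leaves the pipeline
        if field in PII_FIELDS and value is not None:
            protected[field] = hash_value(str(value), key)
        else:
            protected[field] = value
    return protected


if __name__ == "__main__":
    secret = b"replace-with-a-managed-secret"  # placeholder key
    row = {"name": "Ana", "email": "Ana@Example.com", "plan": "pro"}
    print(protect_record(row, secret, drop={"name"}))
```

A keyed hash is used here instead of a bare one because low-entropy values such as email addresses are easy to guess and re-hash; the key makes that impractical for anyone who does not hold it.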

The Outcome

- Launch AI-powered tools faster, without waiting for custom pipelines
- Feed your models richer context, so they make better decisions
- Reduce maintenance, API breakages, and manual data wrangling
- Deliver governed data without overburdening your tech team

Curious how this would work with your AI stack?

Book a call and let’s explore your use case!
