Hi, I’m Petr, the CEO of Dataddo, a global data integration company. Every year, I share what I consider to be the most important big data trends for the year ahead, along with actionable takeaways. And every year, it gets harder to come up with a list that’s different from the previous years’ lists.

This is because, in general, data management trends don’t change radically year on year; they just evolve. Moreover, no matter how many trends I list, they always fall into three main categories: data quality, data security, and data governance.

So, for 2024, I will talk about developments in these three core areas of data management, rather than give longer lists of standalone trends like I did in 2023 and 2022.

Before I start, let’s get one more thing out of the way: in the last twelve months, AI has unquestionably had the biggest impact on how enterprises manage data.

The implications of AI are so far-reaching that it doesn’t make sense to talk about it as its own trend. So, for this article, I will mention the impact of AI in each section.

1. The Importance of Data Quality Is Becoming Painfully Clear

Data quality has always been important. But, in the last year, the rise of AI has greatly amplified its importance.

The flashy part of AI initiatives is, understandably, the data models. However, a data model can only be as good as the data it’s trained on. Many organizations are learning this the hard way.

According to McKinsey, the highest-value AI use cases are consistently hindered by data quality issues like incomplete data mappings, missing data points, and system incompatibilities.

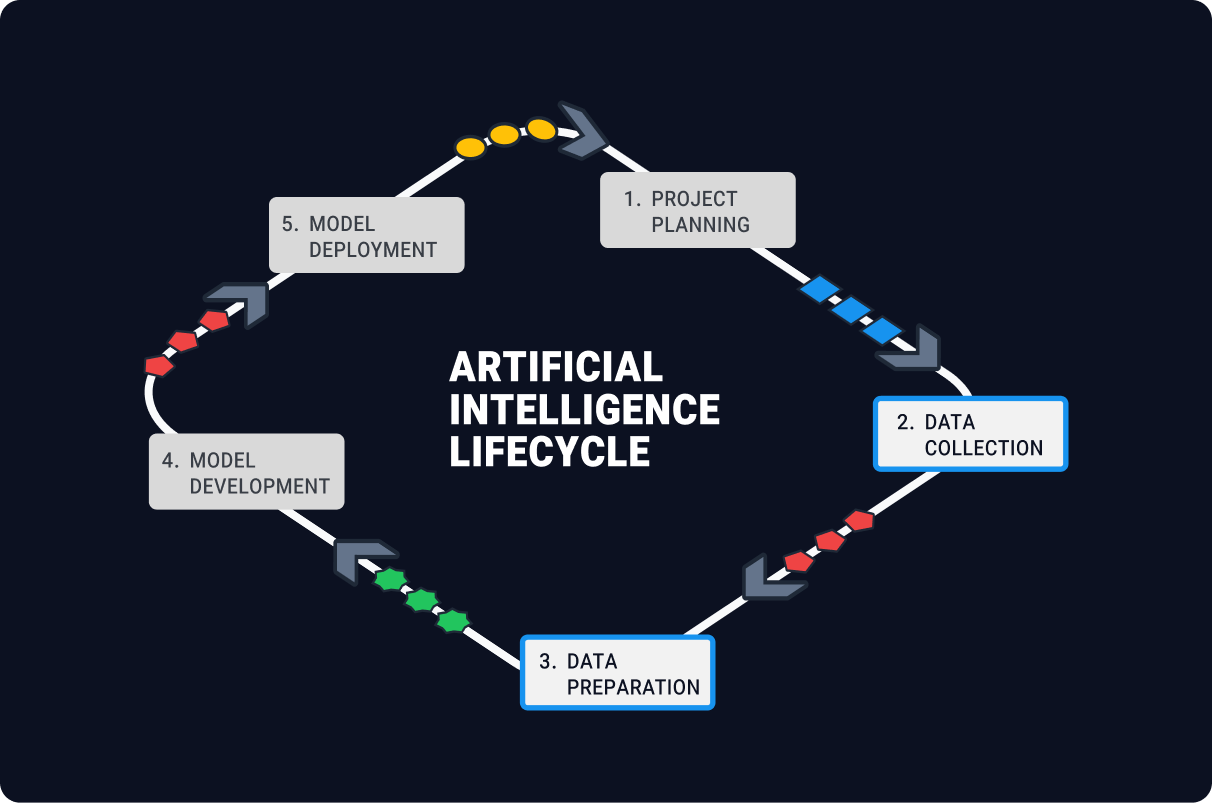

To ensure the success of any data initiative, and AI initiatives in particular, organizations should focus on proper data collection and preparation before model development begins.

In practice, this means two things: implementing a comprehensive data governance policy, and putting the right technological solutions in place for identifying and solving data quality issues as early as possible in the data lifecycle.

Since most organizations that take data quality seriously already have governance policies in place, it’s the technological solutions that tend to provide the advantage that many companies are lacking. Such solutions include:

- Data integration tools for standardizing data and flagging up issues at the earliest point in the data lifecycle.

- Data profiling and filtering tools to keep anomalies, outliers, duplicates, etc. out of downstream systems.

- Data monitoring and lineage tools to track the flow of data across a stack.

- Dataset labeling tools to help data models recognize the context of datasets.
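To make the profiling-and-filtering idea concrete, here is a minimal Python sketch. It rejects rows with missing values and duplicate keys, then flags numeric outliers using Tukey's interquartile-range (IQR) fences. The function name `profile_and_filter`, the field names, the sample data, and the threshold of 1.5 times the IQR are illustrative assumptions. This is not how any particular tool works; commercial profiling tools apply far richer rules and, increasingly, learned models.

```python
import statistics
from typing import Any

Row = dict[str, Any]


def profile_and_filter(
    records: list[Row], key: str, value: str, k: float = 1.5
) -> tuple[list[Row], list[tuple[str, Row]]]:
    """Return (clean_rows, rejected_rows); each rejected row carries a reason tag."""
    rejected: list[tuple[str, Row]] = []
    seen_keys: set[Any] = set()
    candidates: list[Row] = []

    # Pass 1: reject rows with a missing value or a key we have already seen.
    for row in records:
        if row.get(value) is None:
            rejected.append(("missing", row))
        elif row.get(key) in seen_keys:
            rejected.append(("duplicate", row))
        else:
            seen_keys.add(row.get(key))
            candidates.append(row)

    # quantiles() needs at least two data points, so skip outlier checks otherwise.
    if len(candidates) < 2:
        return candidates, rejected

    # Pass 2: Tukey's fences; anything outside [Q1 - k*IQR, Q3 + k*IQR] is an outlier.
    q1, _, q3 = statistics.quantiles([row[value] for row in candidates], n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr

    clean: list[Row] = []
    for row in candidates:
        if low <= row[value] <= high:
            clean.append(row)
        else:
            rejected.append(("outlier", row))
    return clean, rejected


# Hypothetical order data: one duplicate, one missing amount, one suspicious spike.
orders = [
    {"id": 1, "amount": 100}, {"id": 2, "amount": 110}, {"id": 2, "amount": 110},
    {"id": 3, "amount": None}, {"id": 4, "amount": 95}, {"id": 5, "amount": 105},
    {"id": 6, "amount": 5000}, {"id": 7, "amount": 98}, {"id": 8, "amount": 102},
    {"id": 9, "amount": 107},
]
clean, rejected = profile_and_filter(orders, key="id", value="amount")
print([row["id"] for row in clean])
print([(reason, row["id"]) for reason, row in rejected])
```

Tagging each rejected row with a reason, rather than silently dropping it, keeps the filter auditable. That matters when someone downstream asks why a record never arrived.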

More and more often, we are seeing AI-based functionality embedded in these tools. For example, there are now data integration tools capable of recognizing and hashing sensitive information before passing it downstream, as well as data lineage tools that can produce automatic visualizations of data flows.
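The "recognize and hash" pattern can be illustrated with a short Python sketch, under heavy simplifying assumptions. It detects only email addresses, using a deliberately simple regex. Each detected address is replaced with a keyed HMAC-SHA256 digest, so the same address always maps to the same token (which keeps joins possible) without exposing the raw value. The function names and the key are hypothetical placeholders. AI-based tools go further by recognizing sensitive data that no simple pattern can catch.

```python
import hashlib
import hmac
import re

# Deliberately simple email pattern; real PII detection covers names,
# addresses, IDs, free text, and so on, and needs far more than one regex.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed SHA-256 (HMAC) of the normalized value: same input, same token."""
    normalized = value.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def hash_sensitive_fields(record: dict[str, object], key: bytes) -> dict[str, object]:
    """Return a copy of `record` with every email-valued field replaced by its hash."""
    out: dict[str, object] = {}
    for field, value in record.items():
        if isinstance(value, str) and EMAIL_RE.fullmatch(value.strip()):
            out[field] = pseudonymize(value, key)
        else:
            out[field] = value
    return out


# Hypothetical placeholder; in practice, load the key from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

row = {"order_id": 42, "contact": "Jane.Doe@example.com", "note": "priority"}
safe_row = hash_sensitive_fields(row, SECRET_KEY)
print(safe_row)
```

A keyed hash is used instead of a plain SHA-256 digest because it makes it harder for anyone without the key to confirm a guessed email address simply by hashing it themselves.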

Key takeaway: AI is forcing a new approach to data quality that’s based just as much on technological solutions as it is on organizational solutions. Companies that want to keep data quality high in 2024 will need to use both to ensure issues are solved during data collection and preparation.

2. Data Security and Compliance Should Be Taken More Seriously Than Ever Before

Data protection laws are tightening globally, and it’s getting harder to collect customer data without breaking the rules. One clear symptom of this is Google’s plan to discontinue third-party cookies in Chrome in 2024.

Even without AI in the picture, I believe the importance of data security would still be growing. But the use of AI has brought a host of new security and compliance risks. These include:

- Risk of personal identification. Personal identification can be unintentional and non-malicious (e.g., when analysis of data accidentally leads to identification of a subject), or malicious (e.g., when deliberate attacks on AI systems lead to the leakage of sensitive information).

- Risk of inaccurate decision-making. Inaccurate decisions (e.g., as a result of poor data quality) can have harmful political, social, and legal consequences.

- Risk of AI systems being non-transparent. Many deep learning algorithms can’t explain how they arrive at decisions, which erodes public trust in areas like hiring and credit scoring. Unchecked bias in these areas can harm both organizations and individuals.

- Risk of non-compliance with best practices and regulations. Excessive collection or retention of data, incorrect or outdated data, or a lack of proper mechanisms for legal consent: any of these could result in hefty fines and damaged reputations. And they often do. Since the GDPR took effect in 2018, the number of GDPR fines issued each month has grown steadily, and the largest one to date ($1.3 billion) was issued to Meta in 2023.

Another major compliance issue that will color 2024 is ESG (environmental, social, and corporate governance). In June 2023, the International Sustainability Standards Board (ISSB) issued two ESG disclosure standards, IFRS S1 and IFRS S2, which take effect for annual reporting periods beginning on or after January 1, 2024, and which dozens of countries and jurisdictions around the world are moving to adopt. The US is not one of these, but the US Securities and Exchange Commission (SEC) is expected to raise the bar for ESG compliance soon, putting companies at unprecedented risk of violation.

In response to increasing security threats and stricter regulations, we are seeing more features and technologies for protection and compliance embedded directly in data management tools, like deeper access controls, additional data residency locations, and more hashing/masking options.

Key takeaway: On one hand, organizations will need to be all the more intentional about what data they collect and how it’s processed; new tooling and technologies will help offset the complexities of keeping data secure. On the other hand, organizations will need to make sure that any service providers they use are taking equivalent steps to protect customer data; a SOC 2 Type II report is a good benchmark of diligence.

A side note: Google’s discontinuation of third-party cookies for enhanced consumer privacy will present a considerable challenge for digital marketers, who depend on them to track conversions. First-party data will therefore become all the more important in 2024, and marketers will need to start using it more efficiently if they want to remain competitive (one way is to automate offline conversion imports to services like Google Ads).

3. The Modern Data Stack Is Becoming Too Composable; For Many, It’s Time to Consolidate

As I implied in trends #1 and #2, data infrastructures are only getting more complex. The number of SaaS apps businesses are using rises every year, and 81% of companies already have a data strategy that involves multiple clouds.

This makes sense, because adopting specialized solutions to support things like data quality and data security is—and will continue to be—absolutely essential. But I also think that the modern data stack is becoming a bit too composable.

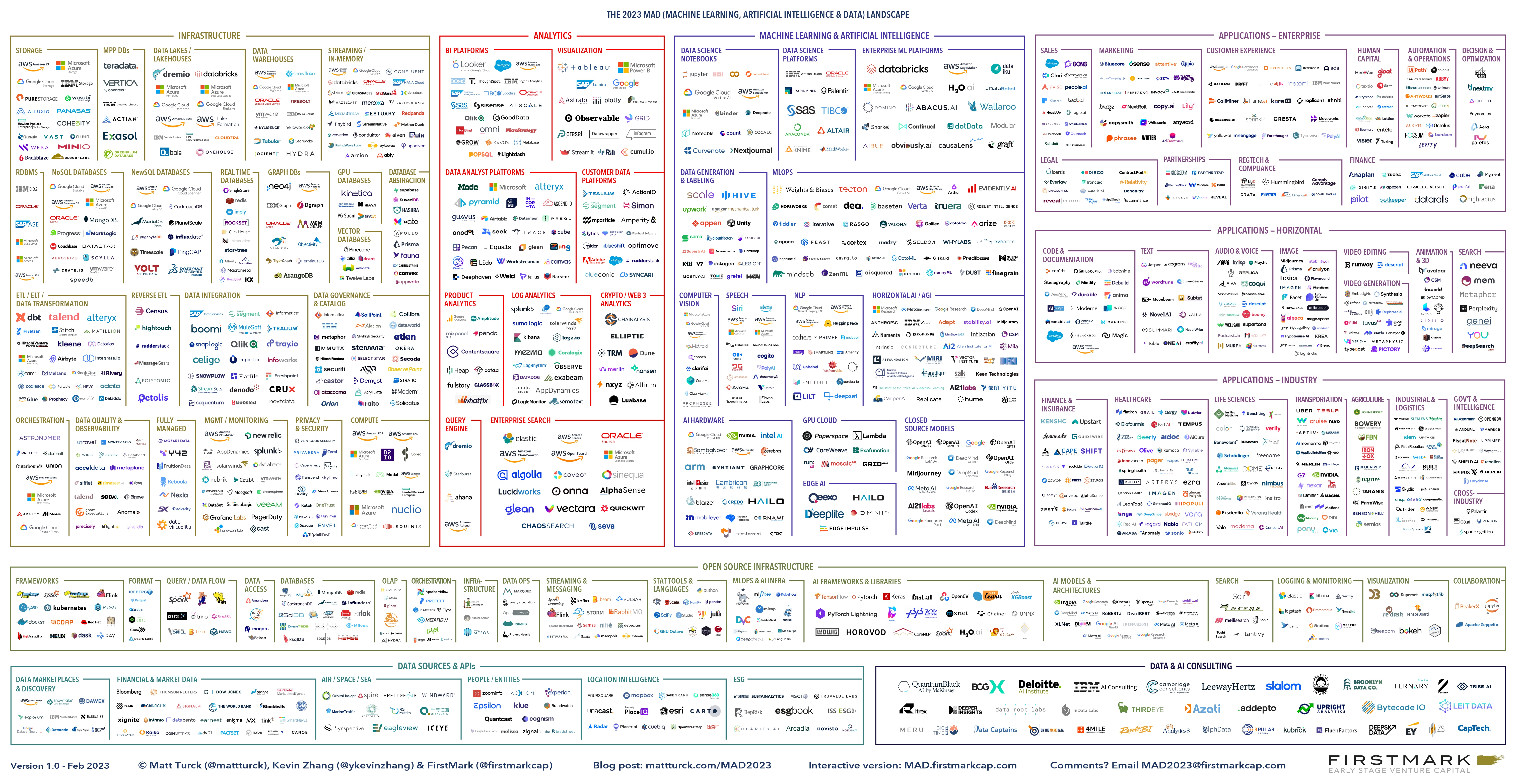

Have you ever seen a data landscape image like the one below? Props to its creators, because it shows what the modern data stack is actually capable of. But if you had just one tool in each category, your stack would be quite fragile. And many companies do.

Source: 2023 MAD Landscape by FirstMark

Source: 2023 MAD Landscape by FirstMark

As the CEO of a data integration company, I know from experience that one way to combat fragility in a data stack is to invest in some kind of consolidated, central connectivity between all its elements.

All too often, organizations use separate tools to connect the elements of a data stack—ETL/ELT tools to connect apps to databases, replication tools to connect databases to one another, and event-based integration/reverse ETL tools to connect apps to one another.

This, in my opinion, is a bridge too far. As data stacks grow more complex, having separate tools for each type of connectivity will become less practical, because there will be no central way to manage what’s connected to what.
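To illustrate what "a central way to manage what’s connected to what" could look like, here is a hypothetical Python sketch of a single connection registry. It records every integration, whatever technology runs it, and answers which systems receive data from a given source. The class names, system names, and connection kinds are illustrative assumptions; this is not Dataddo’s API or any product’s data model.

```python
from collections import Counter, defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """One data flow between two systems (hypothetical model)."""
    source: str
    destination: str
    kind: str  # illustrative labels: "elt", "replication", "reverse_etl", "event"


class ConnectionRegistry:
    """A single catalog for every integration, regardless of the technology behind it."""

    def __init__(self) -> None:
        self._connections: list[Connection] = []
        self._outgoing: defaultdict[str, list[Connection]] = defaultdict(list)

    def add(self, conn: Connection) -> None:
        self._connections.append(conn)
        self._outgoing[conn.source].append(conn)

    def summary(self) -> dict[str, int]:
        """Count registered connections per integration kind."""
        return dict(Counter(conn.kind for conn in self._connections))

    def downstream(self, system: str) -> list[str]:
        """Breadth-first search for every other system fed by `system`, directly or not."""
        seen = {system}  # exclude the start system even if a cycle leads back to it
        order: list[str] = []
        queue = deque([system])
        while queue:
            current = queue.popleft()
            for conn in self._outgoing.get(current, []):
                if conn.destination not in seen:
                    seen.add(conn.destination)
                    order.append(conn.destination)
                    queue.append(conn.destination)
        return order


registry = ConnectionRegistry()
registry.add(Connection("crm", "warehouse", "elt"))
registry.add(Connection("ads", "warehouse", "elt"))
registry.add(Connection("warehouse", "replica_db", "replication"))
registry.add(Connection("warehouse", "crm", "reverse_etl"))
registry.add(Connection("crm", "helpdesk", "event"))

print(registry.summary())
print(registry.downstream("ads"))
```

Because the reverse ETL flow from the warehouse back into the CRM is registered in the same place as the ELT pipelines, the query for the ad platform reveals that its data eventually reaches the help desk. That is hard to see when each type of connection lives in a separate tool.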

Now, a handful of data integration platforms offer all these integration technologies under a single UI, making connections much easier to govern. Dataddo is one such platform, and I believe we will see more in 2024.

What about AI? We’re just now catching our first glimpse of AI-based functionality in data integration platforms, for things like error detection and the recognition and exclusion of sensitive information. Moreover, AI is putting data integration in the spotlight, since properly integrated data is a prerequisite for the success of any AI initiative.

Key takeaway: Having too many data systems without central connectivity increases the risk of data mismanagement. Using products that support—and allow transparent monitoring of—a variety of integration workloads can reduce this risk and promote modern data governance processes.

Offsetting Complexity with Innovation

I think data infrastructures will only ever get more complicated. And with them, the problems of data quality, security, and governance will, too.

But I also think that the technological solutions to these problems will only ever get more robust and accessible. Together with a comprehensive data governance policy and classic due diligence, they are what businesses will need to keep data quality high, prevent security incidents, and efficiently govern data from end to end.

Moving into 2024, I recommend that data leaders evaluate and incorporate these solutions into their data infrastructures, so that they can offset growing complexity with innovation.
